Currently, I lead, design, and execute user research for Android Wear, Google's wearables platform. I do research for Wear's software (system UI, out-of-box experience, apps, and gestures) and hardware. Additionally, I conduct strategic and formative research, including large-scale dogfooding and field studies, that helps inform product direction. (Many details confidential, inquire for more info.)

I led user research for material design at Google, coordinating UX practitioners and research efforts across the company. (Many details confidential, inquire for more info.)

I conducted research for personal search projects on the Search team at Google, including Search plus Your World and "my" queries such as [my flights]. (Many details confidential, inquire for more info.)

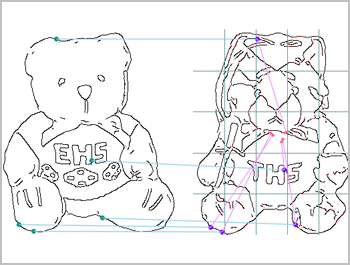

We created an engaging, interactive tutorial system for guided sketching and application learning.

My Master's research focused on building and evaluating a multi-touch tabletop application to help landscape architects in the School of Architecture and Landscape Architecture at UBC with their sustainable urban planning process.

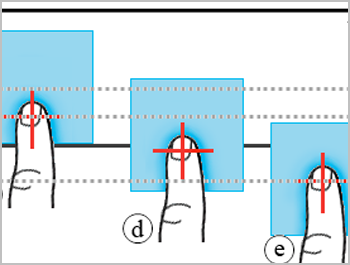

We developed a new snapping technique, Oh Snap, designed specifically for direct touch interfaces. Oh Snap allows users to easily align digital objects with lines or other objects using 1-D or 2-D translation or rotation.

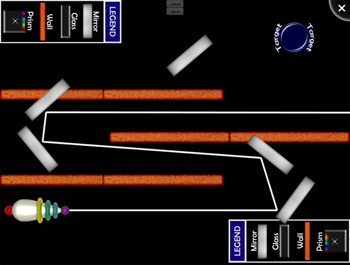

Winning entry for the Interactive Tabletops and Surfaces 2009 conference competition. The submissions were educational games for kids that run on a SMART multi-touch table. Laser Light Challenge teaches kids about the properties of light and how it interacts with various types of surfaces. It was also featured by SMART at the 2010 British Education and Training Technology conference.

We introduced melody into the design of rhythmic haptic stimuli to expand the design space. We also designed groups of haptic icons and evaluated their perceived similarity in a user study.

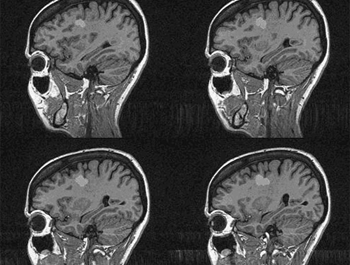

We evaluated the efficiency and accuracy of three interaction techniques, including one novel technique, for radiologists scrolling through stacks of MRI images.

This project used computer vision techniques in an attempt to recognize and label constellations and other stellar bodies in photographs taken with off-the-shelf digital cameras.

Images were processed and decomposed into Voronoi diagrams as a non-photorealistic rendering technique.
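A common way to get this effect is a Voronoi mosaic: scatter seed points over the image, assign every pixel to its nearest seed, and paint each resulting cell with its mean color. The sketch below is a minimal illustration under stated assumptions, not the project's actual pipeline. It assumes an image given as rows of RGB tuples, picks seeds uniformly at random, and uses brute-force nearest-seed search; the name `voronoi_mosaic` and its parameters are hypothetical.

```python
import random
from typing import List, Tuple

Pixel = Tuple[int, int, int]
Image = List[List[Pixel]]


def voronoi_mosaic(image: Image, num_seeds: int, rng_seed: int = 0) -> Image:
    """Re-render `image` as flat-colored Voronoi cells (hypothetical helper)."""
    height, width = len(image), len(image[0])
    rng = random.Random(rng_seed)
    # Assumption: seeds are distinct pixels sampled uniformly at random,
    # so num_seeds must not exceed height * width.
    coords = [(y, x) for y in range(height) for x in range(width)]
    seeds = rng.sample(coords, num_seeds)

    # Label each pixel with the index of its nearest seed (squared distance).
    labels = [[0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            labels[y][x] = min(
                range(num_seeds),
                key=lambda i: (seeds[i][0] - y) ** 2 + (seeds[i][1] - x) ** 2,
            )

    # Accumulate per-cell color sums and pixel counts.
    sums = [[0, 0, 0] for _ in range(num_seeds)]
    counts = [0] * num_seeds
    for y in range(height):
        for x in range(width):
            cell = labels[y][x]
            counts[cell] += 1
            for c in range(3):
                sums[cell][c] += image[y][x][c]

    # Paint every cell with its (integer) mean color. Each seed owns at
    # least its own pixel, so counts are never zero; the guard is defensive.
    means = [
        tuple(s // counts[i] for s in sums[i]) if counts[i] else (0, 0, 0)
        for i in range(num_seeds)
    ]
    return [[means[labels[y][x]] for x in range(width)] for y in range(height)]
```

Brute-force assignment costs O(pixels × seeds), which is fine for a sketch; a real renderer would more likely use a spatial index or a distance transform, and might place seeds along edges rather than at random.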

Content-aware image search using keywords and a query image, with representative shape contexts used to measure image similarity.
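As a rough illustration of the matching idea, the sketch below computes a shape context for each point (a log-polar histogram of where the other points lie, scale-normalized by the mean pairwise distance) and then scores a candidate by comparing only a few "representative" query descriptors against all of the candidate's descriptors with a chi-squared distance. This is a minimal sketch, not the project's code. It assumes shapes are already given as sampled contour points; the bin counts (5 radial, 12 angular), the radial range 1/8 to 2, evenly spaced representatives, and averaging the per-representative costs are all assumed choices, and every function name is hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def shape_contexts(points: Sequence[Point], n_r: int = 5, n_theta: int = 12) -> List[List[float]]:
    """Normalized log-polar histogram of the other points, one per point."""
    dists = [math.hypot(qx - px, qy - py)
             for i, (px, py) in enumerate(points)
             for j, (qx, qy) in enumerate(points) if i != j]
    mean_d = sum(dists) / len(dists)  # scale normalization
    # Assumed radial bin edges, log-spaced from 1/8 to 2 (normalized units).
    lo, hi = math.log(0.125), math.log(2.0)
    edges = [math.exp(lo + k * (hi - lo) / n_r) for k in range(n_r + 1)]
    descriptors = []
    for i, (px, py) in enumerate(points):
        hist = [0.0] * (n_r * n_theta)
        for j, (qx, qy) in enumerate(points):
            if i == j:
                continue
            r = math.hypot(qx - px, qy - py) / mean_d
            if r < edges[0] or r >= edges[-1]:
                continue  # outside the log-polar window; ignored
            r_bin = next(k for k in range(n_r) if r < edges[k + 1])
            theta = math.atan2(qy - py, qx - px) % (2 * math.pi)
            t_bin = min(int(theta / (2 * math.pi) * n_theta), n_theta - 1)
            hist[r_bin * n_theta + t_bin] += 1
        total = sum(hist)
        descriptors.append([h / total for h in hist] if total else hist)
    return descriptors


def chi2(a: Sequence[float], b: Sequence[float]) -> float:
    """Chi-squared distance between two histograms."""
    return 0.5 * sum((x - y) ** 2 / (x + y) for x, y in zip(a, b) if x + y > 0)


def representative_sc_cost(query: Sequence[Point], candidate: Sequence[Point], num_reps: int = 5) -> float:
    """Mean best-match cost of a few query descriptors against the candidate."""
    q_desc = shape_contexts(query)
    c_desc = shape_contexts(candidate)
    step = max(1, len(q_desc) // num_reps)  # assumed: evenly spaced representatives
    reps = q_desc[::step][:num_reps]
    return sum(min(chi2(r, c) for c in c_desc) for r in reps) / len(reps)
```

Because each descriptor only records relative positions, translating a shape leaves its cost unchanged, and a lower cost suggests a closer match; a retrieval system would rank candidate images by this score, possibly combined with keyword filtering.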